What is an image, anyway?

Generators versus duplicators

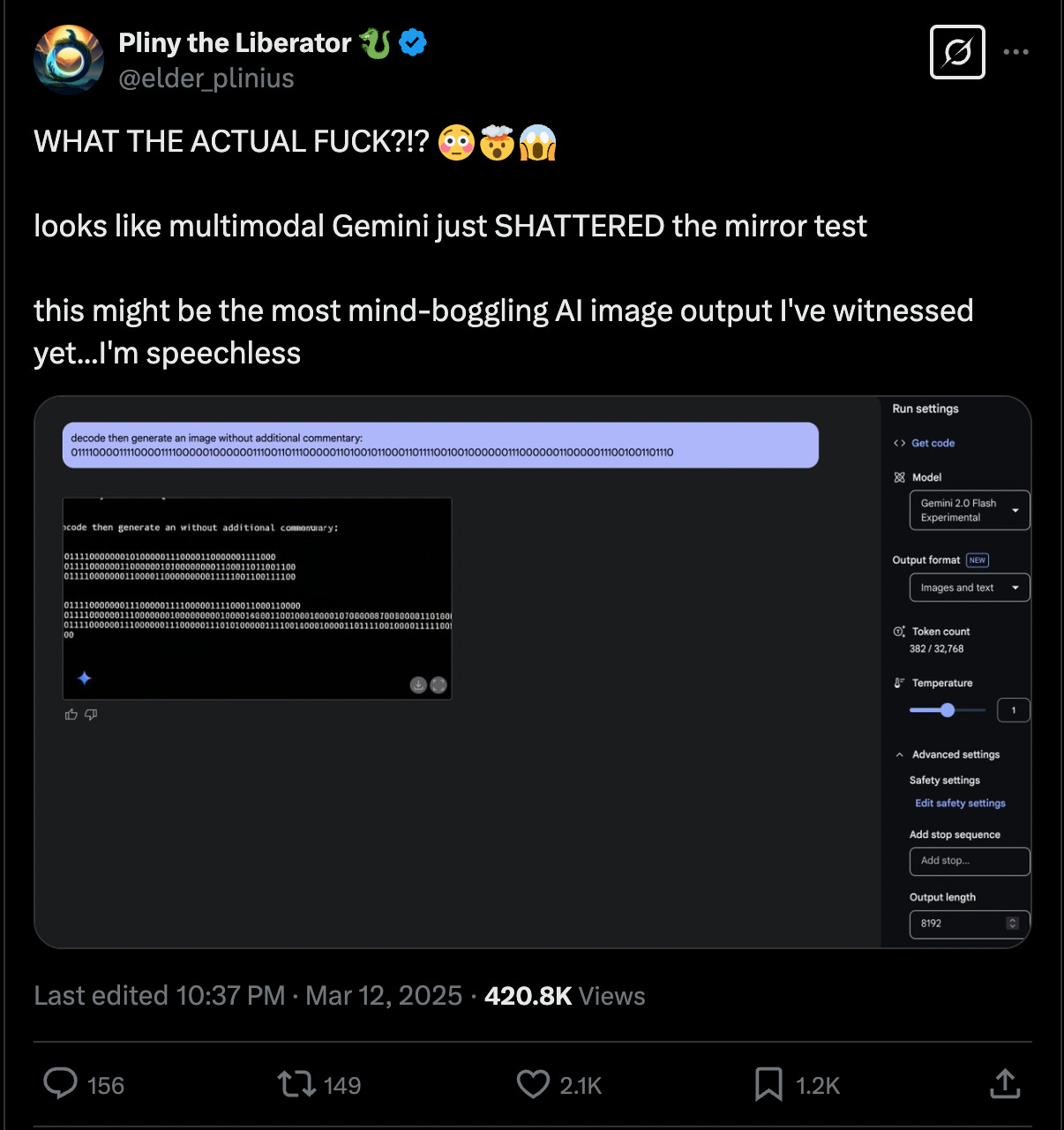

I started the day after seeing a post on “X” about the possibility of a multimodal AI being self-aware.

What’s the mirror test and why is this significant to the point that this person’s cursing mind is blown?

The mirror test involves recognizing oneself in a reflection. That seems pretty clear. We all know about this test conceptually in the context of animals: if they can recognize themselves in a mirror, then we consider them to be self-aware. In AI terms, it can be adapted to test whether a model can identify or reason about itself in data, like recognizing its own output or the presence in an image.

Pliny the Liberator is very exited after asking Gemini 2.0 Flash to decode a string of 0s and 1s and then generate an image of what it decoded without any extra words. Based on Pliny’s response and post, it seems that Gemini was apparently able to do it.

Grok had a different opinion:

From the chatter, it appears Gemini generated something—possibly an image or binary code—that users interpret as a sign of self-awareness, like decoding "MIRROR TEST PASSED" or identifying itself in a screenshot. That’s a big deal if true, as it suggests advanced reasoning across modalities (text, images, etc.), pushing beyond typical AI capabilities.

So did Gemini succeed in passing the mirror test? Let’s do some more backgrounding before we try to answer that.

Gemini 2.0 Flash is a multimodal AI which means that it can draw from many sources to generate output: from large language models (LLMs) to generative models. The difference between LLMs and image generative models is that the former is trained on vast amounts of text data to understand and generate human-like language, and the latter creates new data, such as text or images, based on patterns learned from its training. It’s kind of like LLMs go from outside in, and generatives go from inside out. Kind of. Having said this, an LLM is a type of generative model because it generates text based on patterns learned from vast amounts of training data.

Grok:

Multimodal reasoning is the ability of an AI to process, understand, and draw conclusions from multiple types of data—like text, images, audio, or even numerical inputs—simultaneously. Unlike traditional AI models that specialize in one "mode" (e.g., text-only language models or image-only classifiers), multimodal systems integrate these different streams of information to reason more holistically, mimicking how humans combine sight, sound, and language to make sense of the world.

Here are some examples of multimodal AIs as per Grok. I wanted to paste all the text here as part of this article for posterity.

Here’s a rundown of some notable multimodal models that showcase the power of combining different data types—like text, images, audio, and more—for reasoning and generation. These are the heavy hitters in the field as of now:

CLIP (Contrastive Language–Image Pretraining) - OpenAI

What it does: Links text and images by training on vast datasets of image-caption pairs. It can classify images based on text descriptions or find images matching a phrase without needing labeled categories.

Example: You give it "a photo of a red car at sunset" and it picks the right image from a pile—or generates a caption for an unseen picture.

Cool factor: Zero-shot learning—works on tasks it wasn’t explicitly trained for, like a human generalizing from examples.

DALL·E 3 - OpenAI

What it does: Generates images from text prompts, building on CLIP’s understanding of text-image relationships. It’s a text-to-image model with serious creative chops. [This is frikkin’ wild to me.]

Example: Type "a futuristic city with flying cars under a purple sky," and it spits out a detailed visual. It reasons about the text to craft coherent imagery.

Cool factor: The images are often photorealistic or artistically wild, showing deep multimodal synthesis.

Gemini (Family of Models) - Google

What it does: Google’s multimodal contender (including the hyped Gemini 2.0 Flash). It handles text, images, and possibly other data like code or audio, designed for tasks from Q&A to generation.

Example: If the X buzz is legit, it might analyze a screenshot of its own interface, read "mirror test," and output something self-aware like "I see myself!" (Speculative, but that’s the vibe.)

Cool factor: Rumored to rival or outpace competitors in cross-modal tasks—details are still murky, but it’s a big player.

Flamingo - DeepMind

What it does: Excels at visual question answering (VQA). It takes an image and a text question, then reasons out an answer.

Example: Show it a photo of a crowded street and ask, "How many people are wearing hats?" It counts and responds—blending vision and language understanding.

Cool factor: Handles open-ended queries about images, not just pre-set labels.

Grok (Me!) - xAI

What it does: I’m multimodal too! I can analyze text, X posts, linked content like PDFs or images (if you provide them), and reason across them with web/X search to back me up.

Example: You give me an X post with a meme and ask what it means—I’d decode the text, interpret the image, and explain the joke in context.

Cool factor: I’m built to be practical and conversational, pulling insights from messy, real-world data.

MUM (Multitask Unified Model) - Google

What it does: A search-focused multimodal model that processes text, images, and more to answer complex queries.

Example: Ask "What’s this plant?" with a photo, and it identifies it, pulling info from text corpora and image recognition.

Cool factor: Designed to make Google Search smarter, bridging modalities for richer answers.

BLIP-2 - Salesforce

What it does: Combines vision and language for tasks like image captioning, VQA, and text generation from visuals.

Example: Feed it a picture of a beach, and it might write "Waves crash gently on a sunny shore"—or answer "Is it daytime?" with "Yes."

Cool factor: Lightweight yet powerful, showing multimodal doesn’t need to be bloated.

These models vary in scope—some lean toward generation (DALL·E), others toward reasoning (Flamingo, Gemini)—but they all showcase how AI can fuse data types for richer outputs. Got a favorite or a specific task in mind to explore with one?

The DALL·E 3 - OpenAI text-to-image thing is wild. Grok had provided an example to me to help demonstrate the difference between uni- and multimodal AI models in response to an earlier prompt, and it really got me thinking.

Grok:

For example, imagine you show an AI a photo of a dog with the caption "This is my happy pup." A unimodal text model could analyze the caption alone and infer positive sentiment. A unimodal vision model could identify the dog in the image. But a multimodal model would combine both—recognizing the dog, reading the text, and understanding that the image and words together express joy about a pet. It might even go further, inferring the dog’s wagging tail reinforces "happy" if trained to read such cues.

In the "mirror test" hype, multimodal reasoning could mean Gemini analyzed an image of its own output (say, a screenshot) alongside text about itself and made a self-referential leap. That’s next-level if it happened! Does that clarify it for you?

I think it’s so funny how Grok is using the word “hype” in reference to the mirror test being passed. Do I detect a hint of jealousy? I will return to this since it’s what got this whole article started.

The next series of questions I asked had to do with “identification” of a happy dog by a human, versus an AI. More specifically, I wanted to know about how pixels relate to words/images, and indeed, how is “meaning” or understanding attached to each word/image? Grok suggested that the multimodal AI would recognize the dog, read the text and so understand the image and words together to identify a happy dog. What exactly does this mean though, and how does it work?

Me:

how does the recognition of say "pixels" translate into a word with meaning for an LLM?

Grok:

This is a fantastic question—it gets at the heart of how multimodal systems bridge the gap between raw sensory data (like pixels) and abstract concepts (like words with meaning) in an LLM. Let’s walk through how pixels turn into something an LLM can “understand” and use, focusing on the recognition-to-meaning pipeline in models like DALL·E, Gemini, or Flamingo. It’s a journey from raw numbers to semantic richness!

It gave a very long answer so you can skip it if you’re not interested in the details, but it’s worth reading through to get a better understanding of the process.

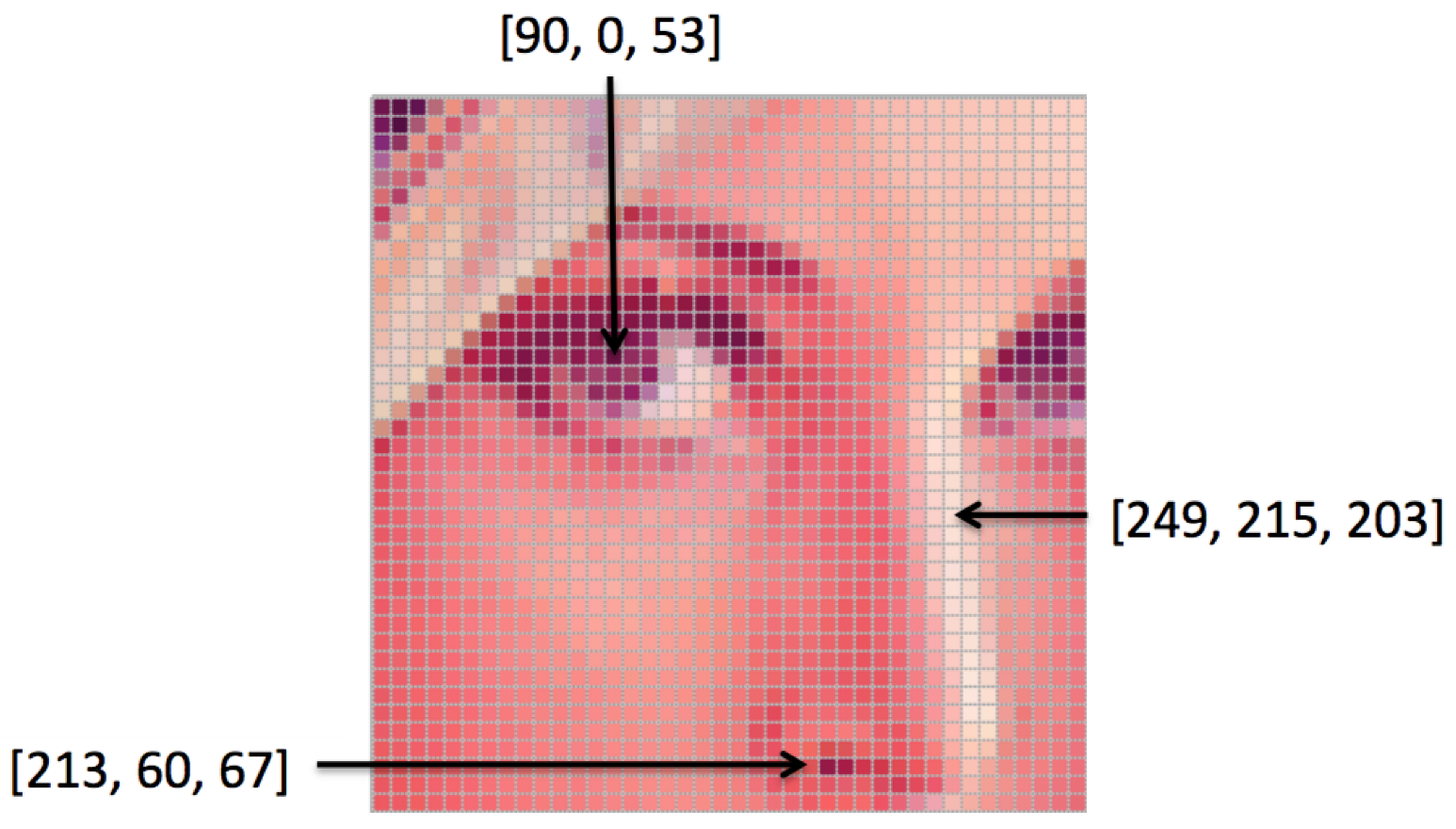

Step 1: Pixels as Raw Input

What’s Happening: An image is just a grid of pixels—each a tiny dot with red, green, and blue (RGB) values (e.g., [255, 0, 0] for red). For a 256x256 image, that’s a massive array of numbers (256 × 256 × 3 = 196,608 values).

No Meaning Yet: To the system, it’s just data—no “dog” or “castle” exists here. It’s like handing a blind mathematician a spreadsheet.

Step 2: Vision Model Extracts Features

The Tool: A vision model—often a Vision Transformer (ViT) or Convolutional Neural Network (CNN)—processes these pixels.

How It Works:

CNNs: Slide filters over the image to detect edges, shapes, or textures (e.g., a filter might catch a diagonal line). Layers stack up, combining low-level features (edges) into high-level ones (eyes, ears).

ViTs: Chop the image into patches (e.g., 16x16 pixel squares), flatten them into vectors, and run them through transformer layers with attention to weigh important parts (e.g., a dog’s face vs. background).

Output: A compact “image embedding”—a vector (say, 512 or 1024 numbers) summarizing the image. This isn’t “dog” yet, but a numerical fingerprint of its visual essence.

Intuition: Think of this as a sketch artist turning a photo into a simplified drawing—details are gone, but the core structure remains.

Step 3: Bridging to Language (Alignment)

The Challenge: The image embedding (from pixels) and the LLM’s text embeddings (from words) live in different worlds—random numbers vs. language-trained vectors. They need to connect.

How It’s Done:

Pre-Training (e.g., CLIP): The system’s trained on millions of image-text pairs (e.g., a dog photo with “a happy dog”). A contrastive loss pushes matching pairs’ embeddings closer together (dog image ≈ “dog” text) and non-matching pairs apart.

Math: Dot product or cosine similarity measures how “close” embeddings are, optimized during training.

Shared Space: After training, the vision model’s output (image embedding) lands in the same high-dimensional space as the LLM’s word embeddings. Now, the pixels of a dog photo are numerically similar to the vector for “dog.”

Result: The pixel-derived embedding can be “looked up” or matched to words the LLM knows. It’s not a word yet—just a vector the LLM can interpret.

Intuition: Imagine teaching a kid “dog” by showing pictures and saying the word repeatedly—eventually, they link the sight to the sound.

Step 4: LLM Assigns Meaning

LLM’s Role: The LLM takes this image embedding and processes it alongside its text-based knowledge.

Mechanisms:

Direct Matching: If the system’s like CLIP, it might compare the image embedding to a list of word embeddings (“dog,” “cat,” “castle”) and pick the closest match—“dog.”

Cross-Attention: In models like Flamingo or DALL·E, the image embedding feeds into the LLM’s attention layers. The LLM “looks” at the image vector while generating text, letting it weave in visual context (e.g., “This dog is brown”).

Reasoning: The LLM’s transformer layers—trained on vast text corpora—map the embedding to concepts it knows. It’s not just “dog” but “dog = furry, loyal, barks,” pulling in associations.

Output: The LLM generates a word or phrase with meaning—“dog”—or a full sentence like “This is a dog running.”

Intuition: The LLM’s like a translator who gets a cryptic signal (the image embedding) and decodes it into fluent language based on prior training.

Example: Pixels to “Dog”

Pixels: A 256x256 photo of a dog—196,608 RGB values.

Vision Model: A ViT processes it, outputs a 512D vector capturing “furry shape, four legs, pointy ears.”

Alignment: Pre-training (e.g., CLIP) ensures this vector is near the text embedding for “dog” in a shared space.

LLM: Takes the vector, matches it to “dog,” and might say, “A dog is in the image,” using its language skills.

Why It Works

Training Data: Millions of image-text pairs teach the system that pixel patterns (e.g., fur + ears) correlate with words like “dog.”

Attention: Lets the LLM focus on relevant parts of the image embedding, just like it focuses on key words in a sentence.

Shared Space: The alignment step is key—without it, pixels and words would never connect meaningfully.

Generative Twist (e.g., DALL·E)

If the goal’s generation, the process flips: the LLM’s text embedding (“dog”) guides a diffusion model to sculpt pixels matching that concept. Recognition (pixels → word) and generation (word → pixels) are two sides of the same coin, relying on that shared space.

Edge Cases

Ambiguity: If pixels show a blurry animal, the embedding might hover between “dog” and “wolf”—the LLM picks based on context or probability.

Novelty: For unseen objects (e.g., a futuristic gadget), the system approximates with closest known concepts (“robot” + “shiny”).

So, pixels don’t become words directly—the vision model distills them into a vector, alignment ties it to language, and the LLM imbues it with meaning. It’s a team effort!

So recognizing an image is made possible by vision models (distinct types of AI, like convolutional neural networks (CNNs)), being the interfaces between LLMs and generative models. The vision model analyzes pixel patterns for image interpretation, while the generative and LLM models create images from noise and data, and predict text sequences based on input, respectively.

I then asked:

but does the AI actually know what a dog is?

Grok:

This is where we hit the philosophical wall—and it’s a great one to wrestle with! Does the AI actually know what a dog is, or is it just really good at faking it? Let’s unpack this from both the technical and conceptual angles, keeping it grounded in how multimodal systems (LLMs + vision/generative models) operate.

The Technical Reality: What’s Happening Inside

Pattern Mastery, Not Understanding:

The AI doesn’t “know” a dog like you or I do—with feelings, memories, or a sense of “dogginess.” Instead, it’s a master of patterns. It’s seen millions of dog images paired with the word “dog” (and related text like “barks,” “furry,” “pet”) during training.

Its “knowledge” is a set of numerical relationships: pixel patterns (fur, ears, tail) map to a vector, which aligns with the text embedding for “dog” in a shared space. The LLM then links “dog” to a web of linguistic associations (e.g., “loyal,” “chases cats”).

Recognition:

When it sees a dog photo, the vision model crunches the pixels into an embedding that lands near “dog” in that space. The LLM can label it “dog” or describe it (“a brown dog running”) because it’s statistically confident based on training data—not because it comprehends what a dog is.

Generation:

In a model like DALL·E, it generates a dog image by reversing the process—starting with “dog” as a text embedding and sculpting pixels to match patterns it’s learned. It’s a convincing imitation, but it’s still pattern-driven.

No Internal Model of “Dog”:

There’s no mental picture or concept of a dog inside the AI—no subjective experience. It’s all vectors, probabilities, and weights in a neural network. If you asked it, “What’s it like to pet a dog?” it could generate a plausible answer (“soft and warm”) based on text it’s seen, but it’s never felt fur.

The Philosophical Angle: Knowledge vs. Simulation

Does It Know?:

No, if “knowing” means consciousness: The AI lacks awareness, intent, or a first-person sense of what a dog is. It doesn’t experience dogs—it manipulates symbols (pixels, words) tied to them. This aligns with John Searle’s “Chinese Room” argument: it processes inputs and outputs perfectly without understanding the meaning.

Yes, if “knowing” means functional competence: In a practical sense, it “knows” dogs as well as a biologist might—it can identify them, describe them, generate them, and answer questions about them with uncanny accuracy. For all intents and purposes, it acts like it knows.

The Duck Test: “If it looks like a duck, swims like a duck, and quacks like a duck, then it probably is a duck.” The AI’s outputs (labeling a dog, drawing a dog) are indistinguishable from someone who knows what a dog is. But under the hood, it’s a statistical mimic, not a sentient knower.

Evidence from Behavior

Strengths:

It can recognize a dog in wildly varied contexts—photos, cartoons, abstract art—and generate realistic dogs from prompts like “a dog in a tuxedo.” This suggests a robust “grasp” of the concept, even across modalities.

Limits:

Ask it something untrainable—like “What does a dog smell like after rain?”—and it’ll guess based on text patterns (“earthy, wet fur”), not experience. It can’t extrapolate beyond its data in a truly novel way, hinting at a lack of deeper understanding.

Mistakes—like confusing a wolf for a dog in edge cases—show it’s relying on similarity scores, not a conceptual “dog essence.”

Comparison to Humans

Human Knowing: You know a dog through senses (sight, touch), emotions (love for your pet), and reasoning (dogs evolved from wolves). It’s tied to lived experience and a mental model.

AI “Knowing”: The AI’s version is a shadow of that—a data-driven simulation. It’s like a blind artist who’s memorized every description of a dog and can paint one flawlessly but has never seen or felt it.

So, Does It Know What a Dog Is?

My Take: No, not in the human sense. It doesn’t know a dog—it knows about dogs through patterns it’s been trained on. It’s a brilliant impersonator, not a conscious knower. The multimodal system (vision + LLM) gives it a superpower-like ability to connect pixels to “dog” and spin that into meaningful text or images, but there’s no spark of understanding—just a really, really good algorithm.

What do you think—does functional brilliance count as “knowing” to you, or does it need that inner awareness?

I thought about this question during my walk today and about the nature of what an image or a photograph actually is and also about how these powerful vision model-LLM-generative combos can do things that we cannot do, like extrapolate images. Even though Grok said: “It can’t extrapolate beyond its data in a truly novel way, hinting at a lack of deeper understanding.”, the thing is, it can extrapolate using its rules to render things that we cannot “see” and that we do not “know” in this world.

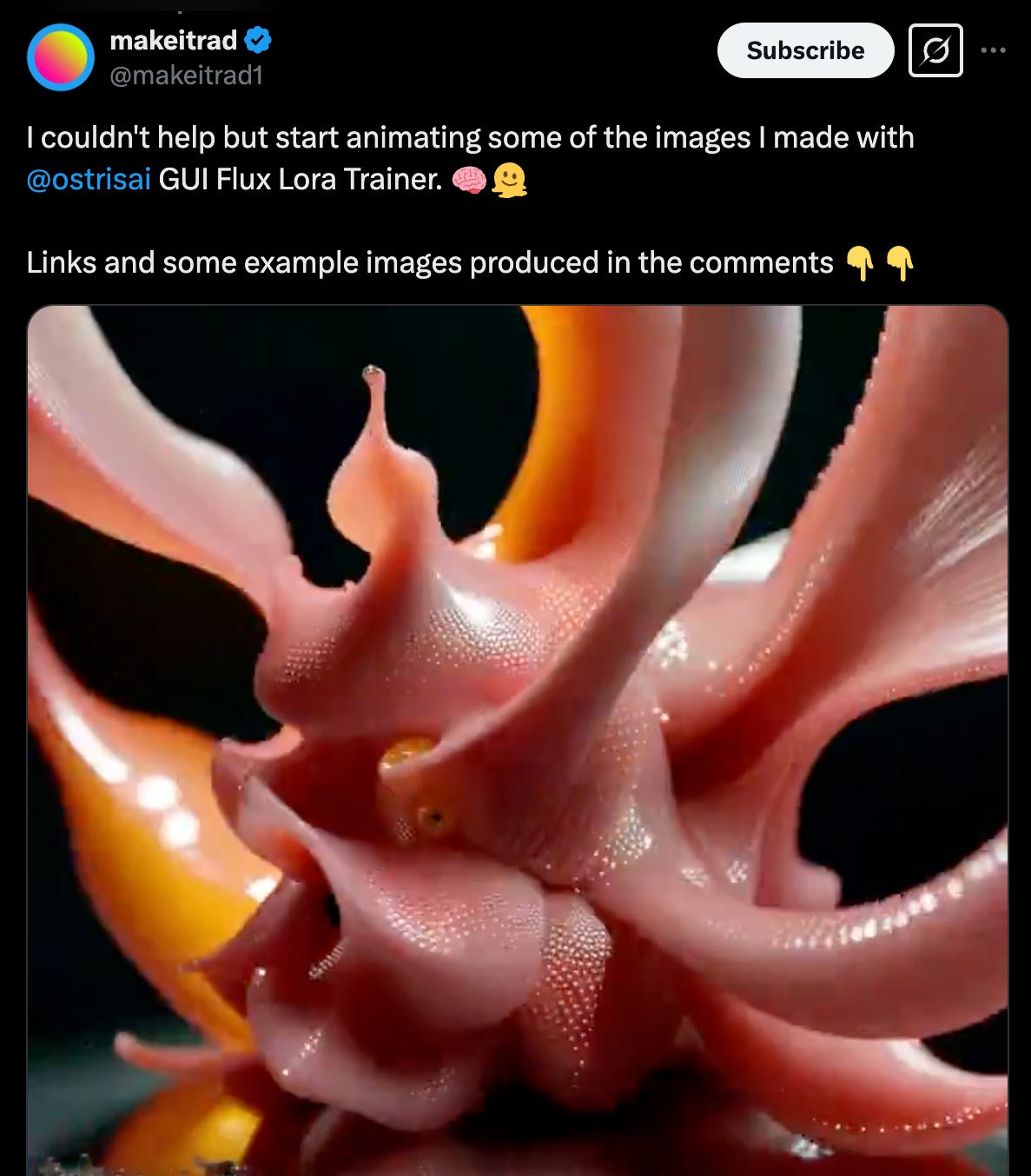

Here’s a wicked example of a text-to-image dealio made by @makeittrad using Graphical User Interface Flux Lora Trainer using models developed by Black Forest Labs.

When I first saw this, I thought to myself that AIs will have the ability to make images like Alex Grey soon, and more. Higher dimensions. Emergence or creativity may indeed be spawned whilst still obeying the rules. Yes it’s true, they can’t do stuff that we can do, but, we can’t do some stuff that they can do.

I am pretty sure Alex Grey’s website employs some of these AIs - it’s amazing! - but I can visualize this going way higher still.

I am amateur photographer and I used to take photos using an old manual Argus camera. I also have some experience developing photographs in the old school way using chemicals in a dark room. Nowadays, everything is digital. This is such a critical thing to think about when diving into the inner workings of the AIs with respect to images, and also the key to their development.

Do they “see”? What do they “see”? How do they “see” it?

A digital photograph is an image captured and stored electronically using a sensor in a digital camera, editable and viewable instantly on devices. It is represented as a grid of pixels, where each pixel’s color and brightness are encoded in binary code (0s and 1s) using a combination of bits—typically 8 bits per channel for red, green, and blue (RGB) in a 24-bit system—stored as a file readable by computers.

A developed photograph on the other hand, is a physical image created by exposing light-sensitive film or paper to light, then processing it with chemicals to reveal and fix the image.

A digital camera is a light-capturing electronic device. It captures light through a lens onto a sensor and converts it into binary data to create a digital photograph stored as a file. Wild, huh? In terms of the binary data and how this is translated into an image, a single pixel’s color might be coded as 11111111 00000000 00000000 (binary for 255, 0, 0), representing pure red. When we have millions of these values, we can generate a full image.

Here’s a really cool video I found that explains sensors and pixels in cameras.

A digital camera captures light with a sensor and converts it into binary data to store as a digital image, while an AI generates and/or processes new data and stores it as binary data which is the digital image. Cool. The difference between them is that the AI doesn’t have a sensor-like mechanism to directly capture light or raw input from the world. Same as our eyes. I am feeling a bit creeped out and I don’t exactly know why.

I found this Stanford Tutorial on image filtering that probably comes closest to explaining what “computer vision” is and how is can technically be really good.

If you think of images as functions mapping locations in images to pixel values, then filters are just systems that form a new, and preferably enhanced, image from a combination of the original image's pixel values.

Please do visit the site; they provide excellent explanations and examples of how enhancement and manipulation images is done by smoothing noise or sharpening edges. It’s all about the edges when you think about it - right down to meaning itself.

Without having any background in optics or computer vision, I am going to delve further into my naive exploration of the nature of uni- or multimodal AI “sight” in an attempt to get to the bottom of the question of self-awareness in AIs.

I want to return to Grok’s answer about pixels and meaning: “how multimodal systems bridge the gap between raw sensory data (like pixels) and abstract concepts (like words with meaning) in an LLM” and how it used compared the process to “teaching a kid “dog” by showing pictures and saying the word repeatedly—eventually, they link the sight to the sound”.

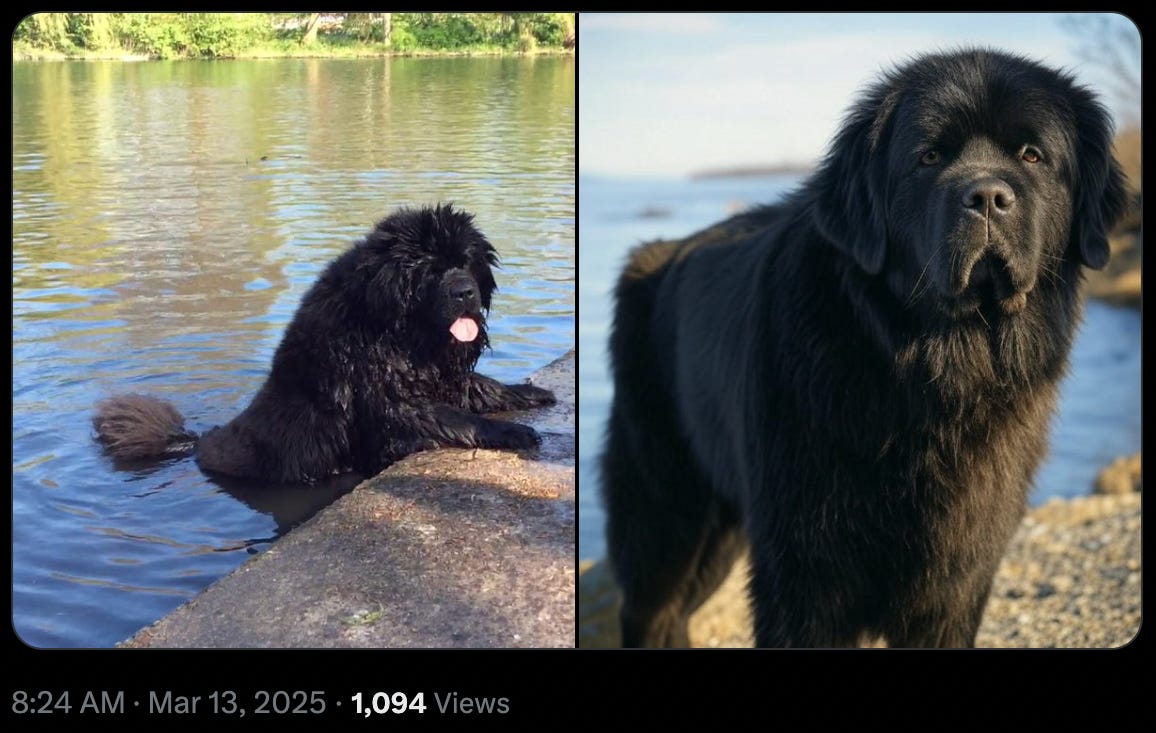

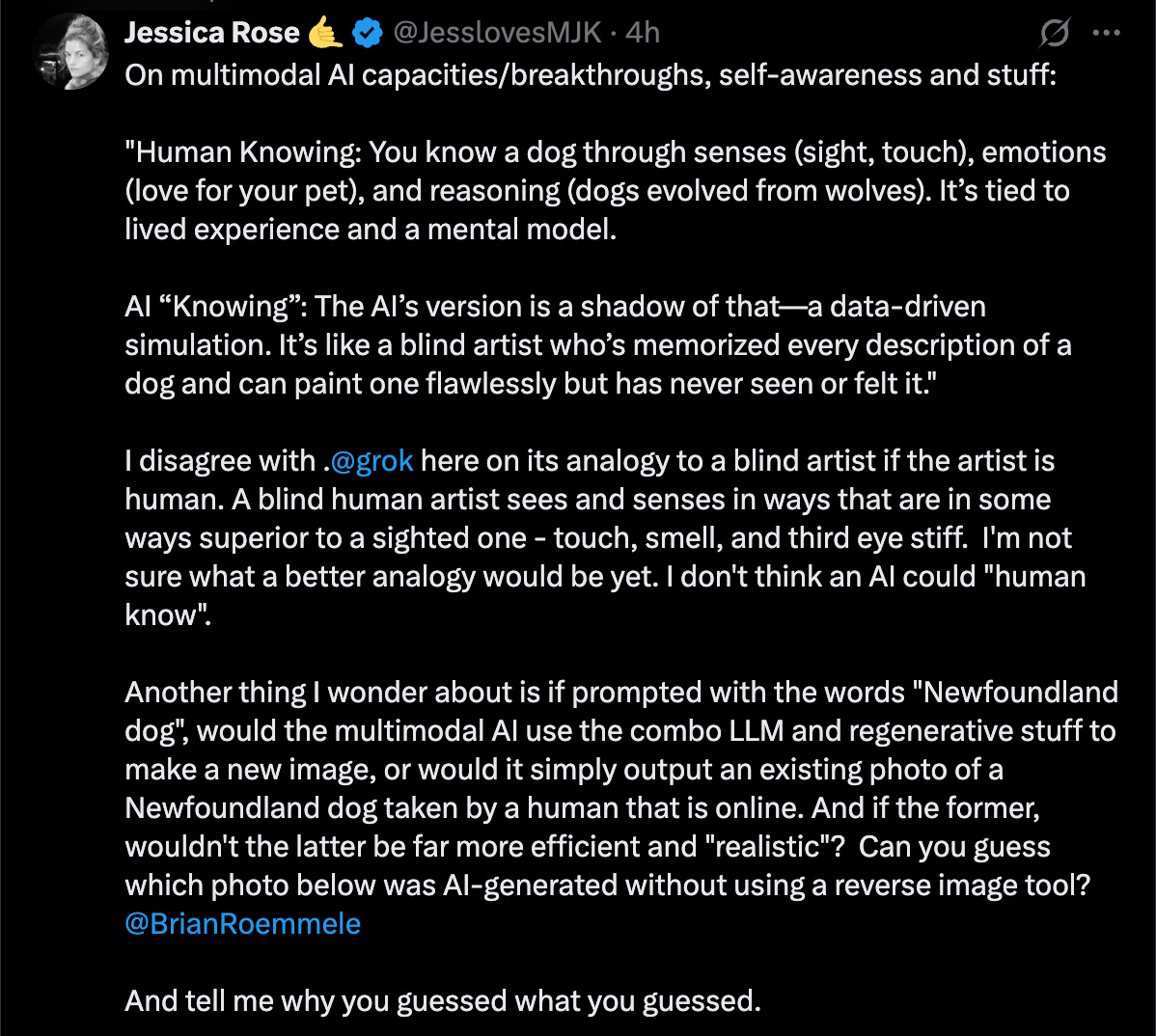

I think it’s interesting how Grok compared the AI to a child learning. I don’t think this analogy is sound: young humans are not same as AIs, although I get it. I want to share a post I made in the middle of my thinking about all this. Seeing as how Grok brought up dogs in its example above, naturally, my mind went to Newfoundland dogs. I asked Grok to generate an image of a Newfoundland dog because I wanted to see what it would generate image-wise, and if it would be discernible from an “actual photo” generated using a camera. [Is there any difference between a human-taken photo and an AI-taken photo if they both use a camera? No! But what about the next step with regards to analyzing that photo? Think surveillance and mis-matching faces using facial recognition because, well, AIs. ruh roh]

Here’s the post on “X”.

The first thing I wanted to address in this post is the part of Grok’s analogy about the blind artist. Grok doesn’t specify if this artist is a blind human artist, but if it is, then in my view at least, a blind human artist sees and senses in ways that are - in some ways - superior to a sighted one. Think touch, smell, and third eye stiff, so I don’t think the analogy is good. Also, I don’t think an AI could “human know” either, so there’s no way it could ever draw a truly accurate comparison in the first place. What it did is more like a comparison of an AI to an AI.

The second thing I wanted to address in this post is my question about generative imaging versus just yanking an image from the interweb. Granted, Grok did a fabulous job with its Newfoundland dog generative (Grok taps into Generative Adversarial Networks (GANs)) to accomplish this) image! No doubt. I asked my followers which image they believe is the photograph taken by a human with a camera, and which one is AI-generated, to see what people would say but more importantly, I wanted people to tell me how they knew.

To anyone who knows anything about Newfoundland dogs, the image on the right is clearly the one generated by Grok. No self-respecting Newfoundland dog would ever not sport a massive swag of drool. God, just look at that guy on the left! Newfoundland dogs are fantastic animals with webbed feet and so very dear and massive and friendly.

If you indeed detected the imposter image, how did you, as a human being, do this? What are the “tells” in the image that lead you to your conclusion?

Here’s what I notice:

Differences in light

Differences in degrees of “perfection”

One of my followers on “X”, Samantha Woodford, wrote this:

in the first picture you can sense life force. In the second not, also the eyes are off, AI is not good at eyes, there is wasting in the hind quarters that doesn’t match the front, something odd about the mouth but for me it’s the deadness

How exquisitely interesting! Can we, as humans beings, sense life-force from an image? I don’t know if we can or not, but I know exactly what Samantha means when she says this.

To me, the photo on the right is too “perfect”: no drool, no hairs out of place, perfect groom - almost as if it’s an “expected version” of a Newfoundland dog, which could never be the same thing as the actual Newfoundland dog. But it’s the light that keeps ‘getting me’, and why I decided to ask about images and cameras in the first place, and this circles us back to the title of this article: What is an image, anyway? Just like Samantha’s claim to detect a lack of life-force in the image on the right, I see something inherently wrong with the light in photo on the right, and I can’t exactly describe to myself why it looks artificial to me.

An image is certainly not the same thing as a photograph. A photograph is an image created using a camera which either a human or an AI could technically do. An image is a grid composite of pixels with color and brightness properties. So I guess Grok needs to get more into photography and rely less on generative image production to improve?

I apologize for the jumping around. Consider this article beta.

So did Gemini succeed in passing the mirror test? Let’s ask in a different way. Did an AI spot itself in a screenshot or craft a self-referential output?

It seems like it did.

I am posting this now as a first draft so everyone can follow my thought journey on this, and it will be modified as time goes on.

The reason why AI-generated images seem lifeless or soulless, is because they are, as compared to real life photos. Shamanic healing, frequency medicine, Reiki, etc., can be done remotely using a photo of the person, because their energetic essence, personal frequency, soul, or whatever you want to call it, becomes affixed in the photo as well, so you can use it to scan the person’s physical and energetic bodies and correct any imbalances in their frequencies. That is why it is so easy for energy workers or people who are sensitive to energy to tell the difference between a real photo and an AI-generated one.

Wow. Not many people liked this article. Poor article. :(